
Task 1: State dependent detection probability

We have

From this we can see that only when is a constant.

Since .

Otherwise, when is non-constant we have .
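
The effect of a state-dependent detection probability can be illustrated numerically. Under the missed-detection hypothesis $a_k = 0$, the posterior is $p(x_k \mid a_k = 0, Z_{1:k}) \propto (1 - P_D(x_k))\, p(x_k \mid Z_{1:k-1})$. When $P_D$ is constant, the factor cancels in the normalization and the Gaussian prediction comes back unchanged. Otherwise the product is in general not Gaussian. The sketch below evaluates both cases on a 1D grid. The $\mathcal{N}(0, 1)$ prediction and the logistic detection profile logistic_pd are hypothetical choices made only for illustration, and gating is ignored.

```python
import numpy as np


def miss_posterior(grid, prior_pdf, p_d):
    """Normalized p(x | a_k = 0) ∝ (1 - P_D(x)) p(x), evaluated on a uniform grid."""
    unnormalized = (1.0 - p_d(grid)) * prior_pdf
    dx = grid[1] - grid[0]
    return unnormalized / (unnormalized.sum() * dx)


def moments(grid, pdf):
    """Mean, variance and skewness of a density sampled on a uniform grid."""
    dx = grid[1] - grid[0]
    mean = np.sum(grid * pdf) * dx
    var = np.sum((grid - mean) ** 2 * pdf) * dx
    skew = np.sum((grid - mean) ** 3 * pdf) * dx / var**1.5
    return mean, var, skew


def constant_pd(x):
    return np.full_like(x, 0.9)


def logistic_pd(x):
    # Hypothetical state-dependent P_D: detection is more likely for larger x
    return 0.9 / (1.0 + np.exp(-2.0 * x))


grid = np.linspace(-10.0, 10.0, 4001)
prior = np.exp(-0.5 * grid**2) / np.sqrt(2.0 * np.pi)  # illustrative prediction N(0, 1)

post_const = miss_posterior(grid, prior, constant_pd)
post_state = miss_posterior(grid, prior, logistic_pd)

print(moments(grid, post_const))  # ≈ (0, 1, 0): identical to the prediction
print(moments(grid, post_state))  # mean pulled below 0, nonzero skewness: not Gaussian
```

With the constant $P_D$ the posterior equals the prediction. With the state-dependent $P_D$, regions where detection is likely are down-weighted after a miss, and this skews the density.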

Task 2: The PDAF event probabilities

a)

Each sample is a hit-or-miss (Bernoulli) trial. If the samples are independent and share the same hit probability, the number of hits over several samples is therefore binomially distributed.

b)

We can approximate this by a Poisson distribution

We want to prove

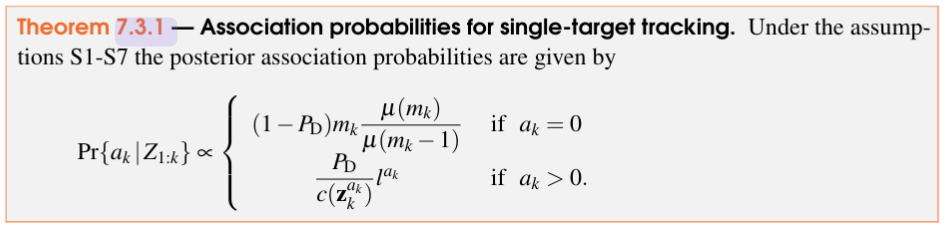

Starting from

Starting with the case where , and knowing that , we get:

While the case where , and we know that

- , due to it being Gaussian-linear

we get:

Since we’re only looking at the proportionality sign, we can remove as it is a factor independent of . We therefore get
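
The result of this derivation can be sanity-checked numerically. The sketch below assumes scalar measurements, a Poisson-distributed clutter count with mean λV, clutter spread uniformly over a region of volume V, a uniform prior over which measurement index belongs to the target, and no gating. It normalizes the full joint probability of each hypothesis with every factor kept, then compares the result with the simplified weights λ(1 − P_D) for a_k = 0 and P_D N(z_i; ẑ, S) for a_k = i. All function names and numbers are illustrative.

```python
import math


def gauss_pdf(z, mean, var):
    """Scalar Gaussian density N(z; mean, var)."""
    return math.exp(-0.5 * (z - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def poisson_pmf(k, mu):
    return mu**k * math.exp(-mu) / math.factorial(k)


def full_hypothesis_probs(zs, z_pred, S, P_D, lam, V):
    """Normalize p(a_k, m, z_1..z_m) over a_k, keeping every factor (assumed model)."""
    m = len(zs)
    mu = lam * V  # expected number of clutter measurements
    # a_k = 0: target missed, all m measurements are clutter, each uniform over V
    w = [(1 - P_D) * poisson_pmf(m, mu) * V ** (-m)]
    # a_k = i: z_i is the target, m - 1 clutter points, target index uniform (1/m)
    for z in zs:
        w.append(P_D * poisson_pmf(m - 1, mu) * V ** (-(m - 1)) / m * gauss_pdf(z, z_pred, S))
    total = sum(w)
    return [wi / total for wi in w]


def simplified_probs(zs, z_pred, S, P_D, lam):
    """Proportionality result: a_k = 0 ∝ lam (1 - P_D), a_k = i ∝ P_D N(z_i; z_pred, S)."""
    w = [lam * (1 - P_D)] + [P_D * gauss_pdf(z, z_pred, S) for z in zs]
    total = sum(w)
    return [wi / total for wi in w]


# Illustrative numbers (assumed): three scalar measurements
zs = [0.3, -1.2, 2.5]
full = full_hypothesis_probs(zs, z_pred=0.0, S=1.0, P_D=0.8, lam=0.05, V=40.0)
simple = simplified_probs(zs, z_pred=0.0, S=1.0, P_D=0.8, lam=0.05)
print(full)
print(simple)  # same values up to rounding
```

The two agree because the Poisson ratio p(m − 1)/p(m) = m/(λV) cancels the 1/m index prior and the extra factor V, which leaves only factors that do not depend on a_k.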

c)

This is the same as the previous task, but with the binomial distribution from a) in place of the Poisson distribution. Skipping some messy algebra, we get

Q.E.D.

d)

We know from b) that , and from the hint we can rewrite the distribution in to become

This is exactly the distribution we found in b). The Poisson approximation is therefore good when the number of trials is large and the per-trial false-alarm probability is small.
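
The Poisson limit behind d) can be checked numerically. The sketch holds the expected number of false alarms μ = n·p fixed and lets the number of trials n grow. The largest pointwise gap between the Binomial(n, μ/n) and Poisson(μ) probability mass functions then shrinks; Le Cam's inequality bounds the summed gap by 2μ²/n. The value μ = 2 is an arbitrary illustrative choice.

```python
import math


def binomial_pmf(k, n, p):
    """P(K = k) for K ~ Binomial(n, p); math.comb returns 0 when k > n."""
    return math.comb(n, k) * p**k * (1.0 - p) ** (n - k)


def poisson_pmf(k, mu):
    """P(K = k) for K ~ Poisson(mu)."""
    return mu**k * math.exp(-mu) / math.factorial(k)


def max_pmf_gap(n, mu, k_max=30):
    """Largest |Binomial(n, mu/n) - Poisson(mu)| over k = 0..k_max."""
    p = mu / n
    return max(abs(binomial_pmf(k, n, p) - poisson_pmf(k, mu)) for k in range(k_max + 1))


mu = 2.0  # illustrative expected number of false alarms, held fixed while n grows
for n in (10, 100, 1000, 10000):
    print(n, max_pmf_gap(n, mu))  # the gap shrinks as n grows and p = mu / n shrinks
```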

Task 3: IPDA vs PDAF


a)

[!Task description] Briefly discuss why the posterior becomes a mixture in single target tracking. In particular, what is the interpretation of a component and its weight. What are the main complicating factors of this mixture?

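In single target tracking we receive several measurements and do not know which of them, if any, originates from the target. The posterior is therefore a mixture with one component per association hypothesis. Each component is the Gaussian state estimate obtained by assuming that a particular measurement is the target's (or that the target was not detected), and its weight is the posterior probability of that hypothesis. The main complicating factor is that the number of components grows exponentially over time, because every new scan multiplies the existing hypotheses. The mixture therefore has to be approximated, for example by merging it into a single Gaussian as the PDAF does.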
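
To see why the exact mixture quickly becomes unmanageable, the sketch below counts its components. It assumes the same m_k gated measurements are available to every component at step k. Each existing component then branches into m_k + 1 new ones (one per measurement, plus a missed detection), so after K steps there are ∏ₖ (m_k + 1) components. The function name is illustrative.

```python
from math import prod


def num_hypotheses(gated_counts):
    """Number of association-hypothesis sequences (mixture components).

    With m_k gated measurements at step k there are m_k + 1 choices:
    one of the m_k measurements, or a missed detection."""
    return prod(m + 1 for m in gated_counts)


print(num_hypotheses([2, 3, 1]))  # → 24  (3 * 4 * 2)
print(num_hypotheses([3] * 20))   # → 1099511627776  (4**20)
```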

b)

[!Task description] What problem does the IPDA try to solve that the PDA does not? Are there any problems that the PDA solves which the IPDA does not solve?

The PDAF assumes the target is always present. Its main focus is figuring out which measurements actually come from the target and which ones are just noise or false alarms. This makes it effective when the target is known to be present.

The IPDA extends this by allowing for the possibility that the target does not exist, for example because it has disappeared, and it keeps track of the probability that the target actually exists. This means the IPDA can “kill” a track when its target is no longer there, which makes it useful in more complex tracking scenarios.

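Therefore, the PDAF does not solve any problems that the IPDA doesn’t already solve: if the existence probability is fixed at one (the target is known to exist and always survives), the IPDA reduces to the PDAF.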

c)

A complicating factor of using the IPDA instead of the PDAF is the additional tuning parameter, the survival probability (the probability that the target survives from one time step to the next), as well as the extra computation needed to update the existence probability.
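
To make the extra computation concrete, here is a sketch of the standard IPDA existence recursion under Poisson clutter with intensity λ. The prediction is $r_{k|k-1} = P_S r_{k-1}$. The update is $r_k = (1 - \delta_k) r_{k|k-1} / (1 - \delta_k r_{k|k-1})$ with $\delta_k = P_D P_G - P_D \sum_i \mathcal{N}(z_k^i; \hat{z}_{k|k-1}, S_k)/\lambda$. The symbols $P_S$ (survival probability) and $P_G$ (gate probability), the scalar measurements, the parameter values and the function name are all assumptions of this sketch. With $r_{k-1} = 1$ and $P_S = 1$ the existence probability stays at one.

```python
import math


def gauss_pdf(z, mean, var):
    """Scalar Gaussian density N(z; mean, var)."""
    return math.exp(-0.5 * (z - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def ipda_existence_step(r_prev, P_S, P_D, P_G, lam, zs_gated, z_pred, S):
    """One prediction + update of the IPDA existence probability (assumed Poisson clutter)."""
    # Prediction: an existing target survives to the next step with probability P_S
    r_pred = P_S * r_prev
    # 1 - delta is the likelihood ratio of the gated data, "exists" vs. "does not exist"
    delta = P_D * P_G - P_D * sum(gauss_pdf(z, z_pred, S) for z in zs_gated) / lam
    return (1.0 - delta) * r_pred / (1.0 - delta * r_pred)


# Illustrative (assumed) parameter values
params = dict(P_S=0.99, P_D=0.9, P_G=0.99, lam=1e-3, z_pred=0.0, S=1.0)
print(ipda_existence_step(0.5, zs_gated=[], **params))     # no detections: r drops (≈ 0.0965)
print(ipda_existence_step(0.5, zs_gated=[0.1], **params))  # detection near prediction: r rises
```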

Task 4: Implement a parametric PDAF

a)

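The validation gate is given by

$$(z - \hat{z}_{k|k-1})^\top S_k^{-1} (z - \hat{z}_{k|k-1}) < g^2,$$

where $\hat{z}_{k|k-1}$ and $S_k$ are the mean and covariance of the predicted measurement (z_est_pred), and $g^2$ is the gate threshold (self.gate).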
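
Below is a minimal NumPy/SciPy sketch of an ellipsoidal gate. A measurement z is accepted when its squared Mahalanobis distance (z − ẑ)ᵀ S⁻¹ (z − ẑ) from the predicted measurement ẑ is below a threshold g². This sketch assumes the common convention of picking g² as the chi-square quantile for a desired gate probability P_G. In the assignment code the squared distance is compared with self.gate, which presumably holds that threshold. The helper in_gate is hypothetical.

```python
import numpy as np
from scipy.spatial.distance import mahalanobis
from scipy.stats import chi2


def in_gate(z, z_pred, S, gate_sq):
    """True if the squared Mahalanobis distance of z from z_pred is below gate_sq (g^2)."""
    innovation = np.asarray(z, dtype=float) - np.asarray(z_pred, dtype=float)
    # Solve S y = innovation rather than forming S^{-1} explicitly
    nis = innovation @ np.linalg.solve(S, innovation)
    return bool(nis < gate_sq)


z_pred = np.array([0.0, 0.0])
S = np.diag([4.0, 1.0])
gate_sq = chi2.ppf(0.99, df=2)  # assumed threshold for P_G = 0.99 (≈ 9.21)

print(in_gate([2.0, 2.0], z_pred, S, gate_sq))  # True: 4/4 + 4/1 = 5 < 9.21
print(in_gate([0.0, 4.0], z_pred, S, gate_sq))  # False: 16/1 = 16 > 9.21
# Same squared distance as the scipy-based condition in the assignment code
print(mahalanobis([2.0, 2.0], z_pred, np.linalg.inv(S)) ** 2)  # ≈ 5.0
```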

This is done by updating the variable condition to be

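    import numpy as np
    from scipy.spatial.distance import mahalanobis

    # Accept z if its squared Mahalanobis distance to the predicted measurement is below the gate
    condition = mahalanobis(z, z_est_pred.mean, np.linalg.inv(z_est_pred.cov)) ** 2 < self.gate

b)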

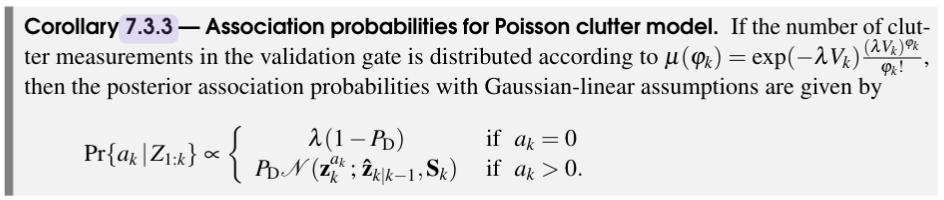

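The formula we want to implement is given by

$$\Pr\{a_k \mid Z_{1:k}\} \propto \begin{cases} \lambda (1 - P_D), & a_k = 0, \\ P_D\, \mathcal{N}(z_k^{a_k}; \hat{z}_{k|k-1}, S_k), & a_k > 0, \end{cases}$$

where $\lambda$ is the clutter density, normalized so that the probabilities sum to one.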

This is done by updating the variable assoc_probs

    def get_assoc_probs(self, z_est_pred: MultiVarGauss[MeasPos],
                        zs: Sequence[MeasPos]) -> np.ndarray:
        """Compute the association probabilities.

        P{a_k|Z_{1:k}} = assoc_probs[a_k] (corollary 7.3.3)

        Hint: use some_gauss.pdf(something), remember to normalize"""

        lamb = self.sensor_model.clutter_density
        P_D = self.sensor_model.prob_detect

        assoc_probs = np.empty(len(zs) + 1)
        # a_k = 0: no measurement originates from the target
        assoc_probs[0] = lamb * (1 - P_D)
        # a_k = i: measurement i originates from the target
        for i, z in enumerate(zs):
            assoc_probs[i+1] = P_D * z_est_pred.pdf(z)
        assoc_probs /= np.sum(assoc_probs)  # normalize so the probabilities sum to 1
        return assoc_probs

c)

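We want to compute the estimate for each association hypothesis using the update rule (7.20) and (7.21). For $a_k = 0$ the prediction is kept, and for $a_k > 0$ an (E)KF update is done with the corresponding measurement.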
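
In the assignment code this update is delegated to self.ekf.update. For a linear measurement model z = Hx + w, w ~ N(0, R), the same per-hypothesis estimates can be sketched directly in NumPy. The two-dimensional position/velocity state, H and R below are illustrative assumptions, and hypothesis_estimates is a hypothetical helper, not part of the assignment framework.

```python
import numpy as np


def hypothesis_estimates(x_pred, P_pred, H, R, zs_gated):
    """Per-hypothesis estimates for an assumed linear model z = H x + w, w ~ N(0, R).

    Entry 0 is the missed-detection hypothesis (prediction kept unchanged);
    entry i >= 1 is a Kalman update with the i-th gated measurement."""
    z_pred = H @ x_pred
    S = H @ P_pred @ H.T + R
    # Gain W = P H^T S^{-1}, computed via a linear solve (S and P are symmetric)
    W = np.linalg.solve(S, H @ P_pred).T
    P_upd = (np.eye(len(x_pred)) - W @ H) @ P_pred
    estimates = [(x_pred, P_pred)]
    for z in zs_gated:
        # Gain and covariance do not depend on z, so only the mean differs per hypothesis
        estimates.append((x_pred + W @ (z - z_pred), P_upd))
    return estimates


x_pred = np.array([0.0, 1.0])   # illustrative state: position, velocity
P_pred = np.eye(2)
H = np.array([[1.0, 0.0]])      # position-only measurement
R = np.array([[1.0]])
ests = hypothesis_estimates(x_pred, P_pred, H, R, [np.array([2.0]), np.array([-1.0])])
for mean, cov in ests:
    print(mean, np.diag(cov))
```

Because the gain and the updated covariance are shared by all detection hypotheses, only the means have to be recomputed for each gated measurement.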

This is done by updating the variable x_ests

    def get_estimates(self,
                      x_est_pred: MultiVarGauss[StateCV],
                      z_est_pred: MultiVarGauss[MeasPos],
                      zs_gated: Sequence[MeasPos]
                      ) -> Sequence[MultiVarGauss[StateCV]]:
        """Get the estimates corresponding to each association hypothesis.

        Compared to the book that is:
        hat{x}_k^{a_k} = x_ests[a_k].mean (7.20)
        P_k^{a_k} = x_ests[a_k].cov (7.21)

        Hint: Use self.ekf"""

        x_ests = []
        # a_k = 0 (missed detection): keep the prediction unchanged
        gauss_ak0 = x_est_pred
        x_ests.append(gauss_ak0)
        # a_k = i: EKF update with the i-th gated measurement
        for z in zs_gated:
            x_est_upd = self.ekf.update(x_est_pred, z_est_pred, z)
            x_ests.append(x_est_upd)
        return x_ests

d)

Here we combine the previous tasks and reduce the resulting Gaussian mixture to a single Gaussian by moment matching, using the corresponding equations from the book.
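
The reduction in step() is moment matching. The mixture mean is $\bar{x} = \sum_a \beta^a \hat{x}^a$ and the covariance is $\sum_a \beta^a \big(P^a + (\hat{x}^a - \bar{x})(\hat{x}^a - \bar{x})^\top\big)$, where $\beta^a$ are the association probabilities. Below is a vectorized sketch of the same computation outside the assignment framework. The helper name reduce_mixture is hypothetical.

```python
import numpy as np


def reduce_mixture(weights, means, covs):
    """Moment-match a Gaussian mixture sum_a w_a N(x_a, P_a) with a single Gaussian."""
    weights = np.asarray(weights, dtype=float)  # shape (M,)
    means = np.asarray(means, dtype=float)      # shape (M, n)
    covs = np.asarray(covs, dtype=float)        # shape (M, n, n)
    mean = weights @ means
    dev = means - mean
    # Weighted average of component covariances plus the spread of the component means
    cov = np.einsum("a,ajk->jk", weights, covs) + np.einsum("a,aj,ak->jk", weights, dev, dev)
    return mean, cov


mean, cov = reduce_mixture([0.5, 0.5], [[-1.0], [1.0]], [[[1.0]], [[1.0]]])
print(mean, cov)  # mean 0 and variance 1 + 1 = 2: the spread of the means adds to the covariance
```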

    def step(self,
             x_est_prev: MultiVarGauss[StateCV],
             zs: Sequence[MeasPos],
             dt: float) -> tuple[MultiVarGauss[StateCV],
                                 MultiVarGauss[StateCV],
                                 MultiVarGauss[MeasPos],
                                 set[int]]:
        """Perform one step of the PDAF."""
        x_est_pred, z_est_pred = self.ekf.pred(x_est_prev, dt)  # Hint: (7.16) and (7.17)
        gated_indices, zs_gated = self.gate_zs(z_est_pred, zs)  # Hint: (7.3.5)
        assoc_probs = self.get_assoc_probs(z_est_pred, zs_gated)  # Hint: (7.3.3)
        x_ests = self.get_estimates(x_est_pred, z_est_pred, zs_gated)  # Hint: (7.20) and (7.21)

        # Moment matching, part 1: probability-weighted mean of the hypothesis estimates
        weighted_mean = 0
        for prob, estimate in zip(assoc_probs, x_ests):
            weighted_mean += prob * estimate.mean

        # Part 2: weighted covariances plus the spread of the hypothesis means
        weighted_covariance = 0
        for prob, estimate in zip(assoc_probs, x_ests):
            mean_deviation = estimate.mean - weighted_mean
            weighted_covariance += prob * (estimate.cov + np.outer(mean_deviation, mean_deviation))

        x_est_upd = MultiVarGauss(weighted_mean, weighted_covariance)
        return x_est_upd, x_est_pred, z_est_pred, gated_indices

Task 5: Tune your parametric PDAF

a)

Increasing the gate probability when the estimated acceleration noise is high works, but the state estimates become less accurate.

b)

This makes the tracking worse, and at some point the filter diverges.

c)

If one does not have access to any ground truth states, it is impossible to detect this behaviour.

It could, however, be detected by keeping the object at a known, stationary position over time and checking whether the estimates diverge, but this is not possible here since we do not have ground truth (GT).

d)

Using an IMM (interacting multiple models) estimator and better tuning.