Problem 1 (25%) Second-Order Necessary Conditions

a)

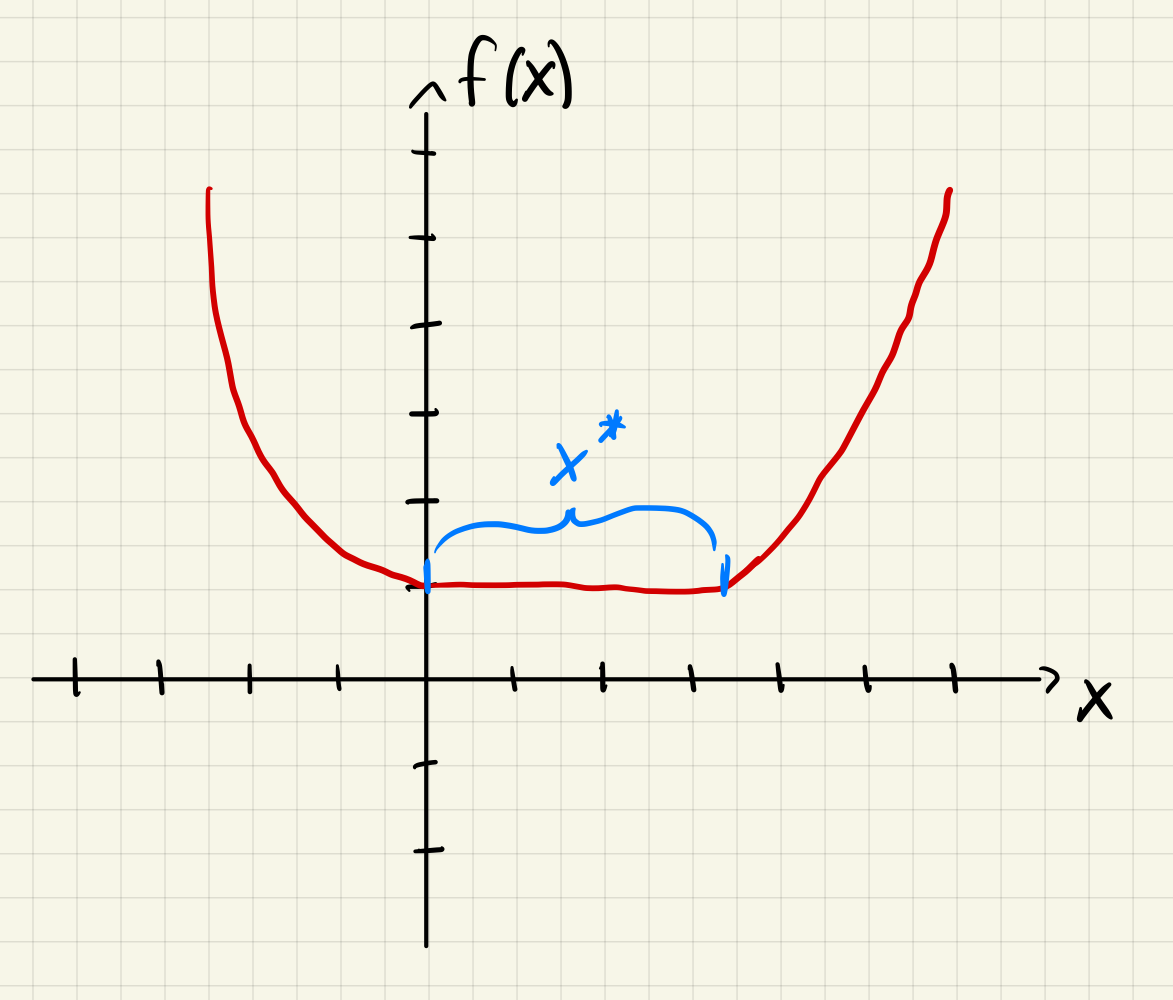

Theorem 2.3

If $x^*$ is a local minimizer of $f$ and $\nabla^2 f$ exists and is continuous in an open neighborhood of $x^*$, then $\nabla f(x^*) = 0$ and $\nabla^2 f(x^*)$ is positive semidefinite.


A visual representation of Theorem 2.3.

b)

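In the scalar case, this is similar to what we learned in R2: if $x^*$ is a local minimizer, then $f'(x^*) = 0$ and the second derivative satisfies $f''(x^*) \ge 0$. The condition is necessary, not sufficient: $f''(x^*) \ge 0$ does not by itself guarantee a minimum, and even when $x^*$ is a minimizer it need not be a strict one.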
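A quick numerical illustration of why the scalar condition is only necessary. This is a sketch with our own example functions ($x^3$ and $x^4$, not part of the assignment): both have $f'(0) = 0$ and $f''(0) = 0$, yet only $x^4$ has a minimum at $0$. The helper `is_local_min_on_samples` is a hypothetical name and only checks a finite set of nearby points, so it illustrates rather than proves.

```python
# Candidate point x* = 0 for two scalar functions (our own examples).
# Each entry: (f, f', f'') written out analytically.
examples = {
    "x^3": (lambda x: x**3, lambda x: 3 * x**2, lambda x: 6 * x),
    "x^4": (lambda x: x**4, lambda x: 4 * x**3, lambda x: 12 * x**2),
}


def is_local_min_on_samples(f, x_star, radius=1e-2, n=200):
    """Return True if f(x) >= f(x_star) on n + 1 evenly spaced points in
    [x_star - radius, x_star + radius]. A finite check, not a proof."""
    samples = [x_star - radius + 2 * radius * i / n for i in range(n + 1)]
    return all(f(x) >= f(x_star) for x in samples)


results = {}
for name, (f, df, d2f) in examples.items():
    results[name] = (df(0.0), d2f(0.0), is_local_min_on_samples(f, 0.0))
    print(f"{name}: f'(0) = {df(0.0)}, f''(0) = {d2f(0.0)}, "
          f"minimum on samples: {results[name][2]}")
```

Both functions pass the second-order necessary condition at $0$, but $x^3$ takes negative values just to the left of $0$, so it has no minimum there.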

c)

Since the theorem only guarantees that the Hessian is positive semidefinite, not positive definite, we cannot guarantee a strict local minimum.
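As a concrete example (our own, not from the assignment), $f(x) = x_1^2$ on $\mathbb{R}^2$ has the constant Hessian $\mathrm{diag}(2, 0)$, which is positive semidefinite but not positive definite. Every point on the line $x_1 = 0$ is a minimizer, so none of them is strict. The sketch below checks this; `sym2x2_eigenvalues` is a hypothetical helper using the closed-form eigenvalues of a symmetric $2 \times 2$ matrix.

```python
import math


def f(x1, x2):
    # Example objective f(x) = x1^2: it does not depend on x2 at all
    return x1**2


# Hessian of f, constant in x: [[d2f/dx1^2, d2f/dx1dx2], [d2f/dx2dx1, d2f/dx2^2]]
H = [[2.0, 0.0], [0.0, 0.0]]


def sym2x2_eigenvalues(A):
    """Closed-form eigenvalues (ascending) of a symmetric 2x2 matrix."""
    a, b, d = A[0][0], A[0][1], A[1][1]
    mean = (a + d) / 2
    radius = math.sqrt(((a - d) / 2) ** 2 + b**2)
    return mean - radius, mean + radius


eigs = sym2x2_eigenvalues(H)
print("Hessian eigenvalues:", eigs)  # smallest is 0: semidefinite, not definite

# Every point (0, t) attains the minimum value 0, so none of them is strict
values = [f(0.0, t) for t in (-1.0, 0.0, 0.5, 2.0)]
print("f on the line x1 = 0:", values)
```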

Problem 2 (40%) The Newton Direction

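Consider the model function based on the second-order Taylor approximation of $f$ around $x_k$,

$$
f(x_k + p) \approx f_k + p^T \nabla f_k + \frac{1}{2} p^T \nabla^2 f_k \, p \overset{\text{def}}{=} m_k(p),
$$

where $f_k = f(x_k)$, $\nabla f_k = \nabla f(x_k)$ and $\nabla^2 f_k = \nabla^2 f(x_k)$.

a)

We find the Newton direction as the step $p$ that minimizes $m_k(p)$. Differentiating with respect to $p$, we find

$$
\nabla_p m_k(p) = \nabla f_k + \frac{1}{2}\left(\nabla^2 f_k + \left(\nabla^2 f_k\right)^T\right) p.
$$

Since the Hessian of $f$ is symmetric, this simplifies to

$$
\nabla_p m_k(p) = \nabla f_k + \nabla^2 f_k \, p.
$$

Setting this gradient equal to zero and solving for $p$ (assuming $\nabla^2 f_k$ is invertible) gives us

$$
p_k^N = -\left(\nabla^2 f_k\right)^{-1} \nabla f_k.
$$

When $\nabla^2 f_k$ is positive definite, $m_k$ is strictly convex, so this stationary point is its unique minimizer.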

Q.E.D
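A minimal Python sketch of this result, assuming a hypothetical $2 \times 2$ gradient and symmetric positive definite Hessian at $x_k$. It computes $p_k^N$ by solving $\nabla^2 f_k \, p = -\nabla f_k$ and checks that $\nabla_p m_k(p_k^N) = 0$. The helper names are our own.

```python
def solve_2x2(A, rhs):
    """Solve A p = rhs for a 2x2 matrix A by Cramer's rule."""
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    if det == 0:
        raise ValueError("singular Hessian: the Newton direction is undefined")
    return [(rhs[0] * A[1][1] - A[0][1] * rhs[1]) / det,
            (A[0][0] * rhs[1] - rhs[0] * A[1][0]) / det]


def newton_direction(grad, hess):
    # p_N = -(hess)^{-1} grad, computed by solving hess * p = -grad
    return solve_2x2(hess, [-grad[0], -grad[1]])


def model_gradient(p, grad, hess):
    # gradient of m_k(p) = f_k + p^T grad + 0.5 p^T hess p for symmetric hess
    return [grad[i] + sum(hess[i][j] * p[j] for j in range(2)) for i in range(2)]


# Hypothetical gradient and symmetric positive definite Hessian at x_k
g = [1.0, -2.0]
H = [[4.0, 1.0], [1.0, 3.0]]
p = newton_direction(g, H)
print("p_N =", p)
print("grad m_k(p_N) =", model_gradient(p, g, H))
```

Solving the linear system rather than forming the inverse explicitly is the usual choice; Cramer's rule is only reasonable for systems this small.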

b)

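The rate of change of $f$ along $p_k^N$ is given by the directional derivative $\nabla f_k^T p_k^N$, which gives us

$$
\nabla f_k^T p_k^N = -\nabla f_k^T \left(\nabla^2 f_k\right)^{-1} \nabla f_k.
$$

This means that the sign of the rate of change depends on the definiteness of $\left(\nabla^2 f_k\right)^{-1}$, which is the same as that of $\nabla^2 f_k$ (the eigenvalues of the inverse are the reciprocals of those of $\nabla^2 f_k$). Assuming $\nabla f_k \neq 0$:

- If $\nabla^2 f_k$ is positive definite (all Leading Principal Minors positive), then $\nabla f_k^T p_k^N < 0$ and $p_k^N$ is a descent direction.

- If $\nabla^2 f_k$ is negative definite (Leading Principal Minors alternate in sign, starting negative), then $\nabla f_k^T p_k^N > 0$, so $p_k^N$ is not a descent direction.

- If $\nabla^2 f_k$ is indefinite, the sign depends on $\nabla f_k$, so we cannot say in general whether $p_k^N$ is a descent direction.

- If $\nabla^2 f_k$ is singular ($\det \nabla^2 f_k = 0$), it is not invertible, meaning that $p_k^N$ does not exist.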
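The following sketch evaluates $\nabla f_k^T p_k^N$ for a positive definite, a negative definite, an indefinite and a singular Hessian, mirroring the cases listed. All matrices and gradients are hypothetical examples, and `newton_slope` is our own helper name.

```python
def solve_2x2(A, rhs):
    """Solve A p = rhs for a 2x2 matrix A by Cramer's rule."""
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    if det == 0:
        raise ValueError("singular Hessian: the Newton direction does not exist")
    return [(rhs[0] * A[1][1] - A[0][1] * rhs[1]) / det,
            (A[0][0] * rhs[1] - rhs[0] * A[1][0]) / det]


def newton_slope(grad, hess):
    """Directional derivative grad^T p_N of f along the Newton direction."""
    p = solve_2x2(hess, [-grad[0], -grad[1]])
    return grad[0] * p[0] + grad[1] * p[1]


g = [1.0, 1.0]
slopes = {
    "positive definite": newton_slope(g, [[2.0, 0.0], [0.0, 1.0]]),
    "negative definite": newton_slope(g, [[-2.0, 0.0], [0.0, -1.0]]),
    # Indefinite Hessian: the sign of the slope depends on the gradient
    "indefinite, g = (1, 1)": newton_slope(g, [[2.0, 0.0], [0.0, -1.0]]),
    "indefinite, g = (2, 1)": newton_slope([2.0, 1.0], [[2.0, 0.0], [0.0, -1.0]]),
}
for case, s in slopes.items():
    print(f"{case}: slope = {s}")

# Singular Hessian: no Newton direction can be computed
try:
    newton_slope(g, [[1.0, 0.0], [0.0, 0.0]])
    singular_raised = False
except ValueError:
    singular_raised = True
print("singular Hessian rejected:", singular_raised)
```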

c)

Given the following unconstrained minimization problem with objective function

Where and .

We find the minimizer of

Where the Hessian is given by

Which gives

Iterating gives

This means that Newton's method always reaches the optimum in a single step.
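The objective function for this part did not survive, so the sketch below assumes a strictly convex quadratic $f(x) = \frac{1}{2} x^T Q x - b^T x$ with a symmetric positive definite $Q$ (hypothetical values). For such a function the gradient is $Qx - b$ and the Hessian is the constant $Q$, so one Newton step from any starting point lands on $x^* = Q^{-1} b$.

```python
def solve_2x2(A, rhs):
    """Solve A x = rhs for a nonsingular 2x2 matrix A by Cramer's rule."""
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    return [(rhs[0] * A[1][1] - A[0][1] * rhs[1]) / det,
            (A[0][0] * rhs[1] - rhs[0] * A[1][0]) / det]


# Assumed quadratic objective f(x) = 0.5 x^T Q x - b^T x (hypothetical Q and b)
Q = [[3.0, 1.0], [1.0, 2.0]]  # symmetric positive definite
b = [1.0, -1.0]


def grad(x):
    # gradient of the assumed quadratic: Q x - b (its Hessian is Q everywhere)
    return [Q[i][0] * x[0] + Q[i][1] * x[1] - b[i] for i in range(2)]


def newton_step(x):
    # x + p_N, where p_N solves Q p = -grad(x)
    g = grad(x)
    p = solve_2x2(Q, [-g[0], -g[1]])
    return [x[0] + p[0], x[1] + p[1]]


x_star = solve_2x2(Q, b)  # exact minimizer Q^{-1} b
starts = ([0.0, 0.0], [10.0, -7.0], [-3.5, 42.0])
steps = [newton_step(x0) for x0 in starts]
for x0, x1 in zip(starts, steps):
    print(x0, "->", x1)
```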

d)

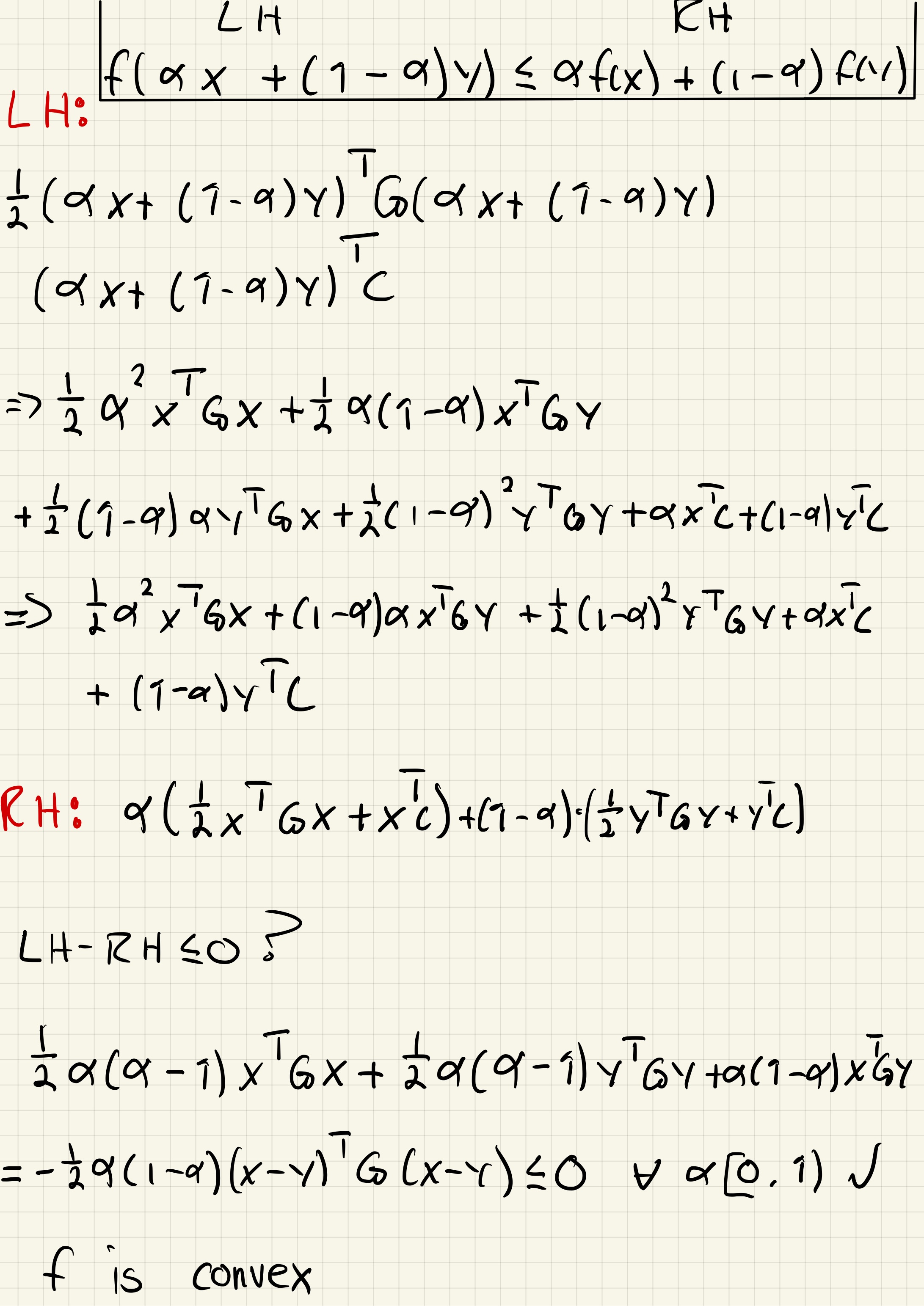

We quickly see that the domain is convex.

We need the following to be satisfied for the function to be convex


We see that is convex for all values of due to having only positive Leading Principal Minors.
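A small sketch of the Leading Principal Minors test (Sylvester's criterion) for a symmetric matrix stored as a list of rows. The test matrices are hypothetical examples. In this form the criterion only certifies positive definiteness; checking positive semidefiniteness requires all principal minors, not just the leading ones.

```python
def det(M):
    """Determinant by cofactor expansion along the first row (fine for small matrices)."""
    n = len(M)
    if n == 1:
        return M[0][0]
    total = 0
    for j in range(n):
        # minor: delete row 0 and column j
        minor = [row[:j] + row[j + 1:] for row in M[1:]]
        total += (-1) ** j * M[0][j] * det(minor)
    return total


def leading_principal_minors(M):
    """D_k = det of the top-left k x k block of M, for k = 1, ..., n."""
    return [det([row[:k] for row in M[:k]]) for k in range(1, len(M) + 1)]


def is_positive_definite(M):
    """Sylvester's criterion for a symmetric M: positive definite iff all D_k > 0."""
    return all(d > 0 for d in leading_principal_minors(M))


A = [[2, -1, 0], [-1, 2, -1], [0, -1, 2]]  # hypothetical symmetric test matrix
B = [[1, 2], [2, 1]]                       # indefinite: D_2 = 1 - 4 = -3
print(leading_principal_minors(A), is_positive_definite(A))
print(leading_principal_minors(B), is_positive_definite(B))
```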

Problem 3 (35%) The Rosenbrock Function


Compute the Gradient and Hessian of the Rosenbrock Function

$$
f(x) = 100\left(x_2 - x_1^2\right)^2 + \left(1 - x_1\right)^2.
$$

Show that $x^* = (1, 1)^T$ is the only minimizer of this function, and that the Hessian matrix at that point is a Positive-Definite Matrix.

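The Gradient is given by

$$
\nabla f(x) = \begin{bmatrix} -400 x_1 \left(x_2 - x_1^2\right) - 2\left(1 - x_1\right) \\ 200\left(x_2 - x_1^2\right) \end{bmatrix}
$$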

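And the Hessian is given by

$$
\nabla^2 f(x) = \begin{bmatrix} 1200 x_1^2 - 400 x_2 + 2 & -400 x_1 \\ -400 x_1 & 200 \end{bmatrix}
$$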
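To double-check the derivatives, here is a sketch comparing the analytic gradient and Hessian with central finite differences at an arbitrarily chosen point $(-1.2, 1)$. The step sizes and the tolerances in the check are our own assumptions.

```python
def rosenbrock(x1, x2):
    return 100 * (x2 - x1**2) ** 2 + (1 - x1) ** 2


def grad(x1, x2):
    # analytic gradient of the Rosenbrock function
    return [-400 * x1 * (x2 - x1**2) - 2 * (1 - x1), 200 * (x2 - x1**2)]


def hess(x1, x2):
    # analytic Hessian of the Rosenbrock function
    return [[1200 * x1**2 - 400 * x2 + 2, -400 * x1],
            [-400 * x1, 200]]


def fd_grad(x1, x2, h=1e-6):
    # central differences of f in each coordinate
    return [(rosenbrock(x1 + h, x2) - rosenbrock(x1 - h, x2)) / (2 * h),
            (rosenbrock(x1, x2 + h) - rosenbrock(x1, x2 - h)) / (2 * h)]


def fd_hess(x1, x2, h=1e-5):
    # central differences of the analytic gradient; column j holds d(grad)/dx_j
    col1 = [(a - b) / (2 * h) for a, b in zip(grad(x1 + h, x2), grad(x1 - h, x2))]
    col2 = [(a - b) / (2 * h) for a, b in zip(grad(x1, x2 + h), grad(x1, x2 - h))]
    return [[col1[0], col2[0]], [col1[1], col2[1]]]


point = (-1.2, 1.0)
err_g = max(abs(a - b) for a, b in zip(grad(*point), fd_grad(*point)))
err_H = max(abs(a - b)
            for ra, rb in zip(hess(*point), fd_hess(*point))
            for a, b in zip(ra, rb))
print("max gradient error:", err_g)
print("max Hessian error:", err_H)
```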

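We then solve $\nabla f(x) = 0$ to find the stationary points:

$$
-400 x_1 \left(x_2 - x_1^2\right) - 2\left(1 - x_1\right) = 0,
$$

$$
200\left(x_2 - x_1^2\right) = 0.
$$

The second equation gives us

$$
x_2 = x_1^2.
$$

Putting this into equation one gives

$$
-2\left(1 - x_1\right) = 0 \quad \Longrightarrow \quad x_1 = 1, \qquad x_2 = x_1^2 = 1.
$$

This means that $x^* = (1, 1)^T$ is the only stationary point. Every local minimizer must be a stationary point (first-order necessary condition), and since $f(x) \ge 0$ for all $x$ while $f(x^*) = 0$, $x^*$ is indeed a minimizer. Hence $x^* = (1, 1)^T$ is the only minimizer of the Rosenbrock Function.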

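The Hessian at this point is given by

$$
\nabla^2 f(x^*) = \begin{bmatrix} 802 & -400 \\ -400 & 200 \end{bmatrix}.
$$

We check the Leading Principal Minors:

$$
D_1 = 802 > 0, \qquad D_2 = 802 \cdot 200 - (-400)^2 = 160400 - 160000 = 400 > 0.
$$

Since both are strictly positive, the Hessian is positive definite at $x^*$.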
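A final sketch evaluating these expressions exactly (integer arithmetic) at $x^* = (1, 1)$: the gradient vanishes, $f(x^*) = 0$, and both Leading Principal Minors of the Hessian are positive. The function names are our own.

```python
def rosenbrock(x1, x2):
    return 100 * (x2 - x1**2) ** 2 + (1 - x1) ** 2


def grad(x1, x2):
    # analytic gradient of the Rosenbrock function
    return [-400 * x1 * (x2 - x1**2) - 2 * (1 - x1), 200 * (x2 - x1**2)]


def hess(x1, x2):
    # analytic Hessian of the Rosenbrock function
    return [[1200 * x1**2 - 400 * x2 + 2, -400 * x1],
            [-400 * x1, 200]]


x_star = (1, 1)  # integers, so every value below is exact
g = grad(*x_star)
H = hess(*x_star)
D1 = H[0][0]                                # first leading principal minor
D2 = H[0][0] * H[1][1] - H[0][1] * H[1][0]  # determinant of the 2x2 Hessian
print("f(x*) =", rosenbrock(*x_star), " grad =", g)
print("Hessian =", H, " D1 =", D1, " D2 =", D2)
```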