Problem 1 (60%) Open-Loop Optimal Control

We have the model

The process has been at , , but at a disturbance moves it to . We wish to solve a Finite Horizon LQR () with the cost (or objective) function

Where (for the time being), and

a) Eigenvectors and Eigenvalues

For a discrete, causal system to be stable, we need to have eigenvalues with absolute value smaller than .

Using WolframAlpha we get that the eigenvalues of are

The system is therefore stable.

b)

is a vector, while is a matrix for the dimensions to match

If we set , we can rewrite the cost function as

We now need to find and .

We know that

Which means that we can write as

Note that the reason that we have in the bottom left is due to the general form of LQR has a in front.

Similarly we can write as

c)

When we have as a objective function, we need to look at and to determine if the minimization problem is convex. Since is a Positive-Semidefinite Matrix, and is a Positive-Definite Matrix, the QP is convex.

If also was a Positive-Definite Matrix, then the problem would be strictly convex.

In other words, convexity depends solely on and .

d) Equality-Constrained Quadratic Programs

We will now cast the optimal control problem as a equality-constrained QP.

Each row in the constraint represents the state equation for a time instant. That is the reason for the structure given.

With the same reasoning we can define as

We know that the KKT Matrix for Equality-Constrained QPs is given as

Link to originalWhere , , and .

We can solve the open-loop optimal control problem with the following Matlab script.

% Define the system dynamics matrices

A = [0, 0, 0;

0, 0, 1;

0.1, -0.79, 1.78];

B = [1; 0; 0.1];

C = [0, 0, 1];

% Initial state

x0 = [0; 0; 1];

% Set the control horizon

N = 30;

% Determine the number of states and controls

nx = size(A, 2);

nu = size(B, 2);

% Define the cost matrices

Qt = 2 * diag([0, 0, 1]);

Rt = 2;

I_N = sparse(eye(N));

Q = sparse(kron(I_N, Qt));

R = sparse(kron(I_N, Rt));

G = blkdiag(Q, R);

% Construct the equality constraint matrices

Aeq_c1 = sparse(eye(N * nx)); % Identity matrix for x

Aeq_c2 = sparse(kron(diag(ones(N-1, 1), -1), -A)); % Shifted dynamics matrix

Aeq_c3 = sparse(kron(I_N, -B)); % Control dynamics

Aeq = [Aeq_c1 + Aeq_c2, Aeq_c3];

beq = sparse([A * x0; zeros((N - 1) * nx, 1)]);

% Initialize zero matrices for KKT system formulation

zero_m = sparse(zeros(N * nx));

zero_v = sparse(zeros(N * (nx + nu), 1));

% Assemble the KKT system

KKT_mat = [G, -Aeq';

Aeq, zero_m];

KKT_vec = [zero_v; beq];

% Solve the KKT system for optimal trajectory

KKT_sol = KKT_mat \ KKT_vec;

% Extract solution for plotting

z = KKT_sol(1:N * (nx + nu)); % Solution vector

y = [x0(3); z(nx:nx:N * nx)]; % Extract y_t from solution

u = z(N * nx + 1:end); % Extract control inputs

% Correct the time vector to include the initial step for plotting

t = 0:N; % Now t ranges from 0 to N, making it have N+1 elements

% Plot adjustments to match the corrected time vector

figure(1);

subplot(2, 1, 1);

plot(t, y, '-ko'); % Plot y_t over the control horizon, ensuring t and y have the same length

grid on;

ylabel('y_t');

title('Optimal Trajectory');

% Assuming u is plotted correctly against t-1 in the original code

subplot(2, 1, 2);

plot(t(1:end-1), u, '-ko'); % Plot control inputs over the control horizon, excluding the last element of t to match u's length

grid on;

xlabel('Time step, t');

ylabel('Control input, u_t');

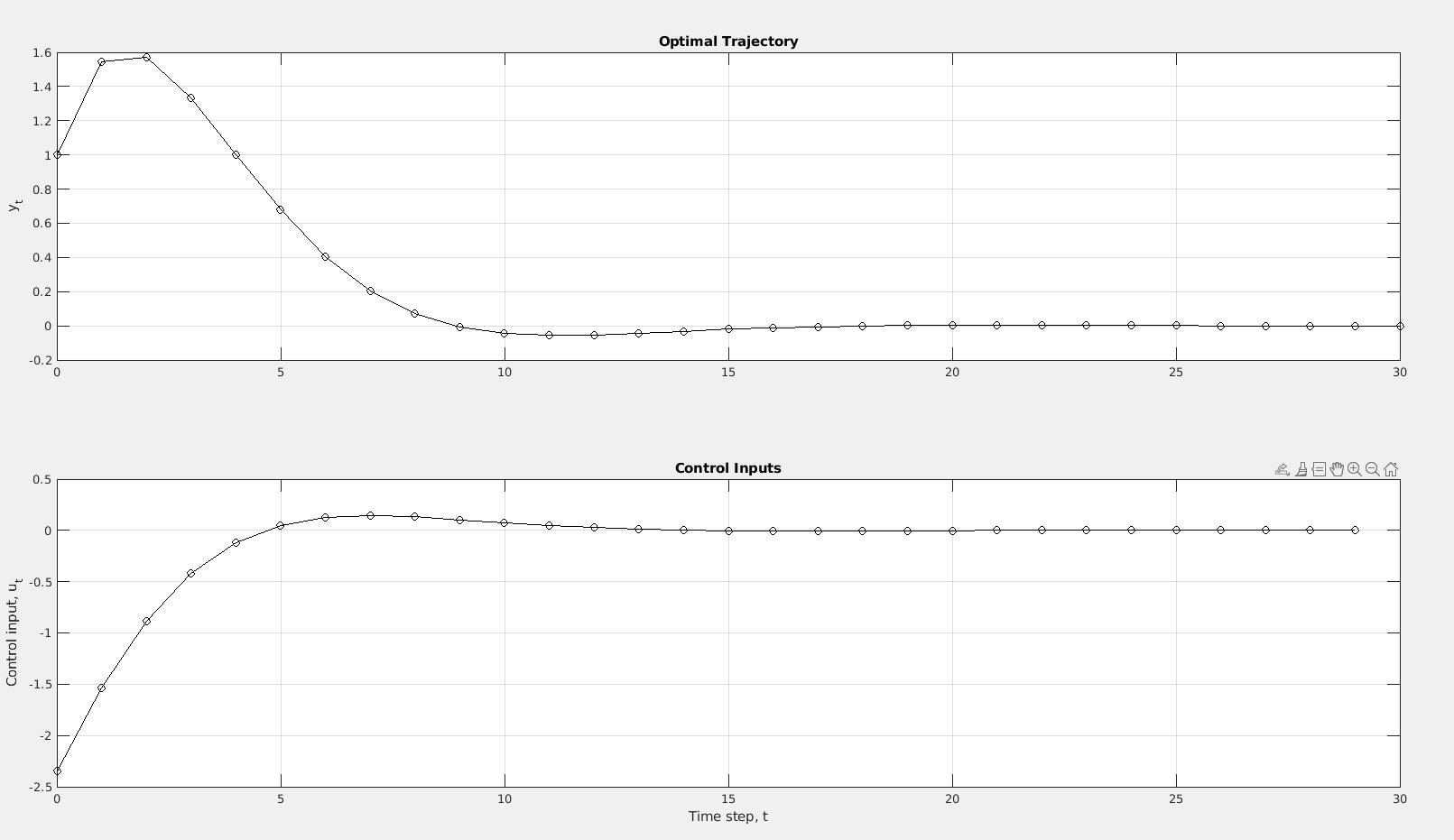

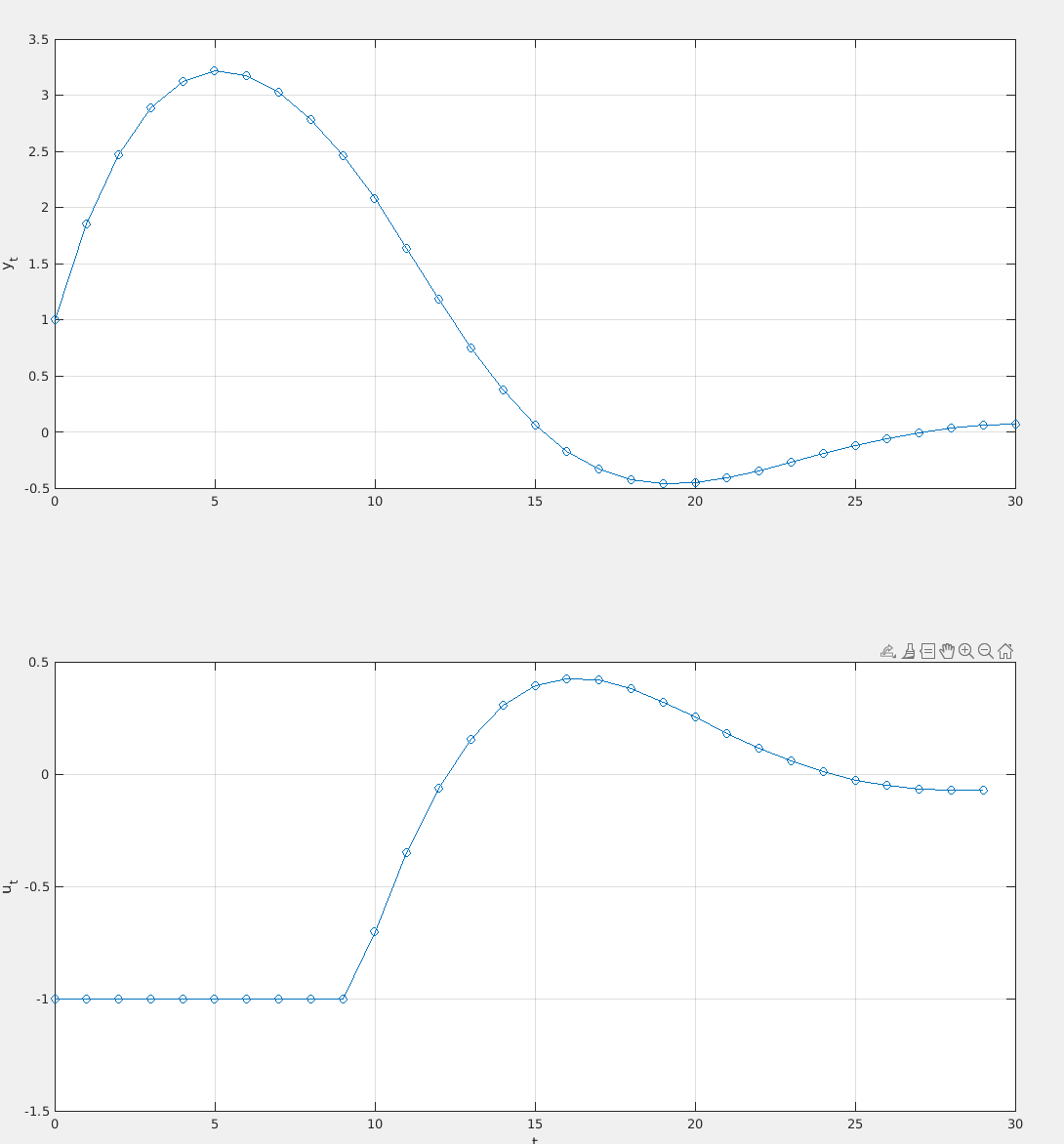

title('Control Inputs');Yielding the following plot

Why do we call this open-loop control? We call this for an open-loop control sequence due to not having new measurements after the system has started. Open-loop = no feedback.

What are the advantages of including feedback…? Including feedback into the system can make up for not having a perfect model (which is impossible irl) or other disturbances.

…and how can this be accomplished? We could include feedback by calculating the optimal path again after each new measurement. Although this would be compute heavy, it would also be way more accurate.

e)

While d) focused on solving the problem with the KKT system, we will in this sub task solve the QP directly using the quadprog function.

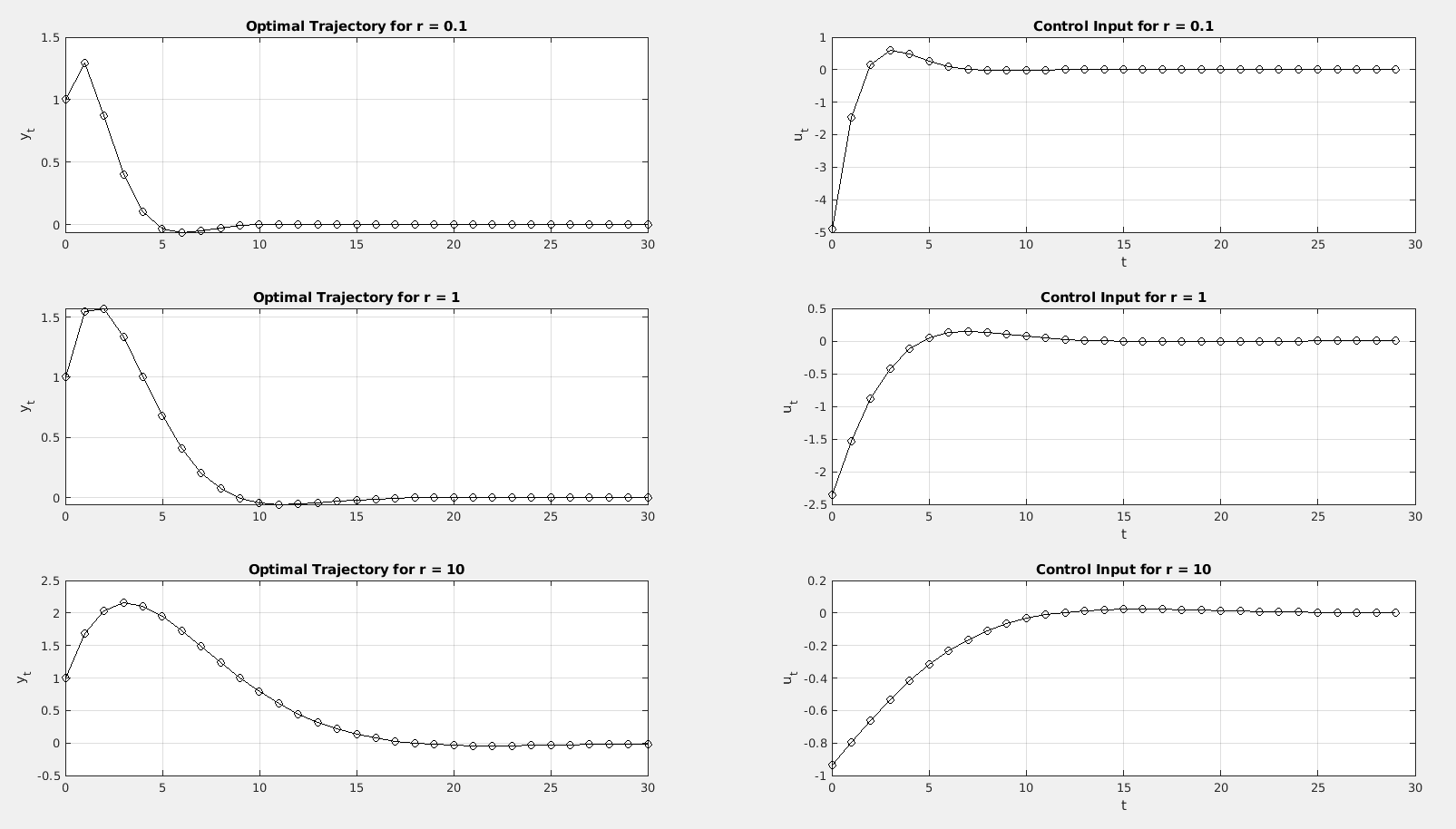

Modifying the script gives us the following figures with one iteration of quadprog

We see that for lower values of , we have more aggressive control due to decreasing the cost of using energy.

f)

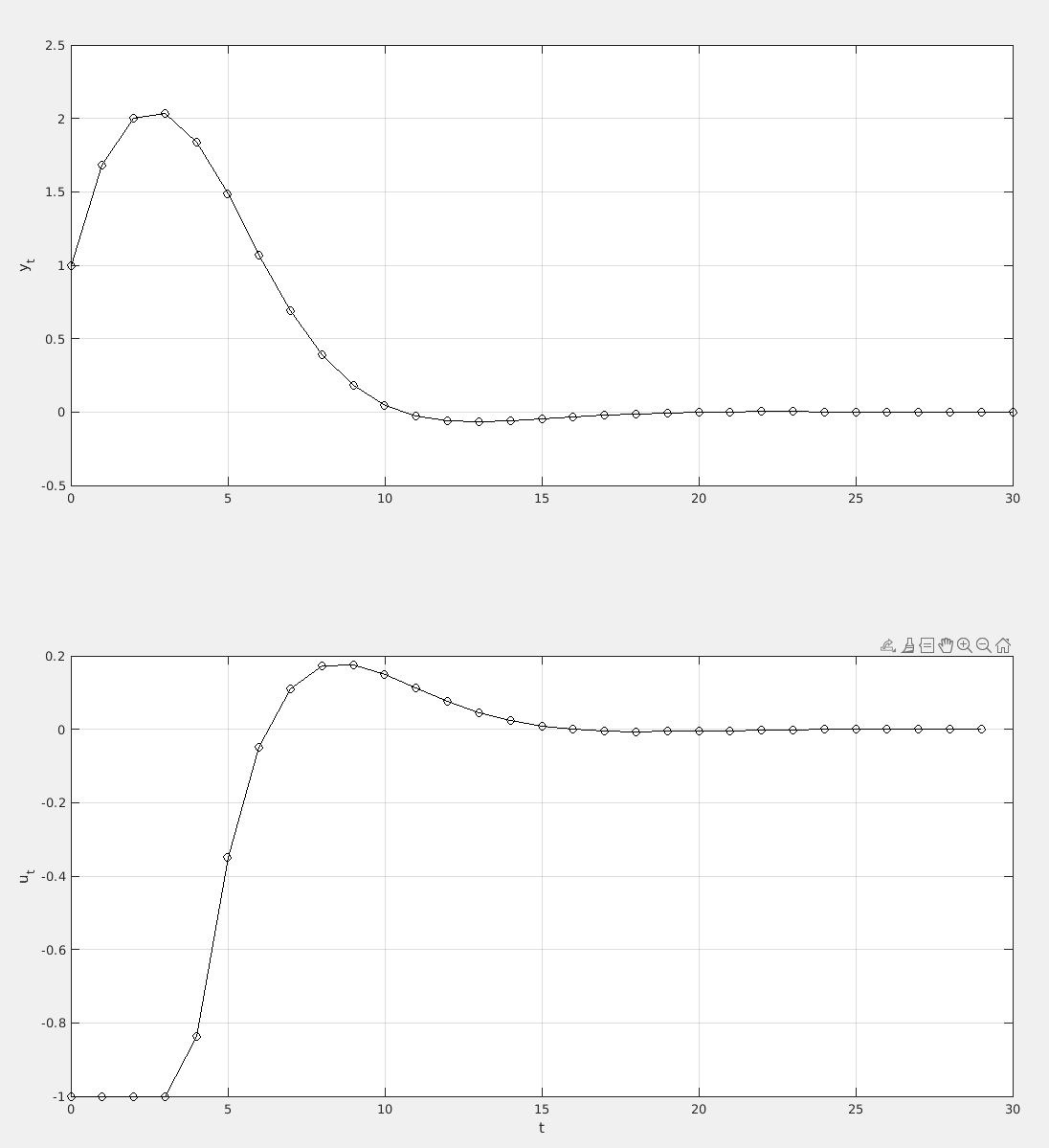

We adjust such that we have no constraints on (), and the following constraint for , .

This is done the following way.

% Inequality constraints

x_lb = -Inf(N*nx,1); % Lower bound on x

x_ub = Inf(N*nx,1); % Upper bound on x

u_lb = -ones(N*nu,1); % Lower bound on u

u_ub = ones(N*nu,1); % Upper bound on u

lb = [x_lb; u_lb]; % Lower bound on z

ub = [x_ub; u_ub]; % Upper bound on zAnd then lb and ub is passed to quadprog.

Which yields

Problem 2 (40%) Model Predictive Control (MPC)

a)

More technically, we calculate a finite horizon open-loop optimal control problem at each time instant, which is based on the current measurement.A way to control a process while satisfying the set of constraint. A model of the process is used to compute the control signals (inputs) that optimize predicted future process behavior.

Link to original

Link to original

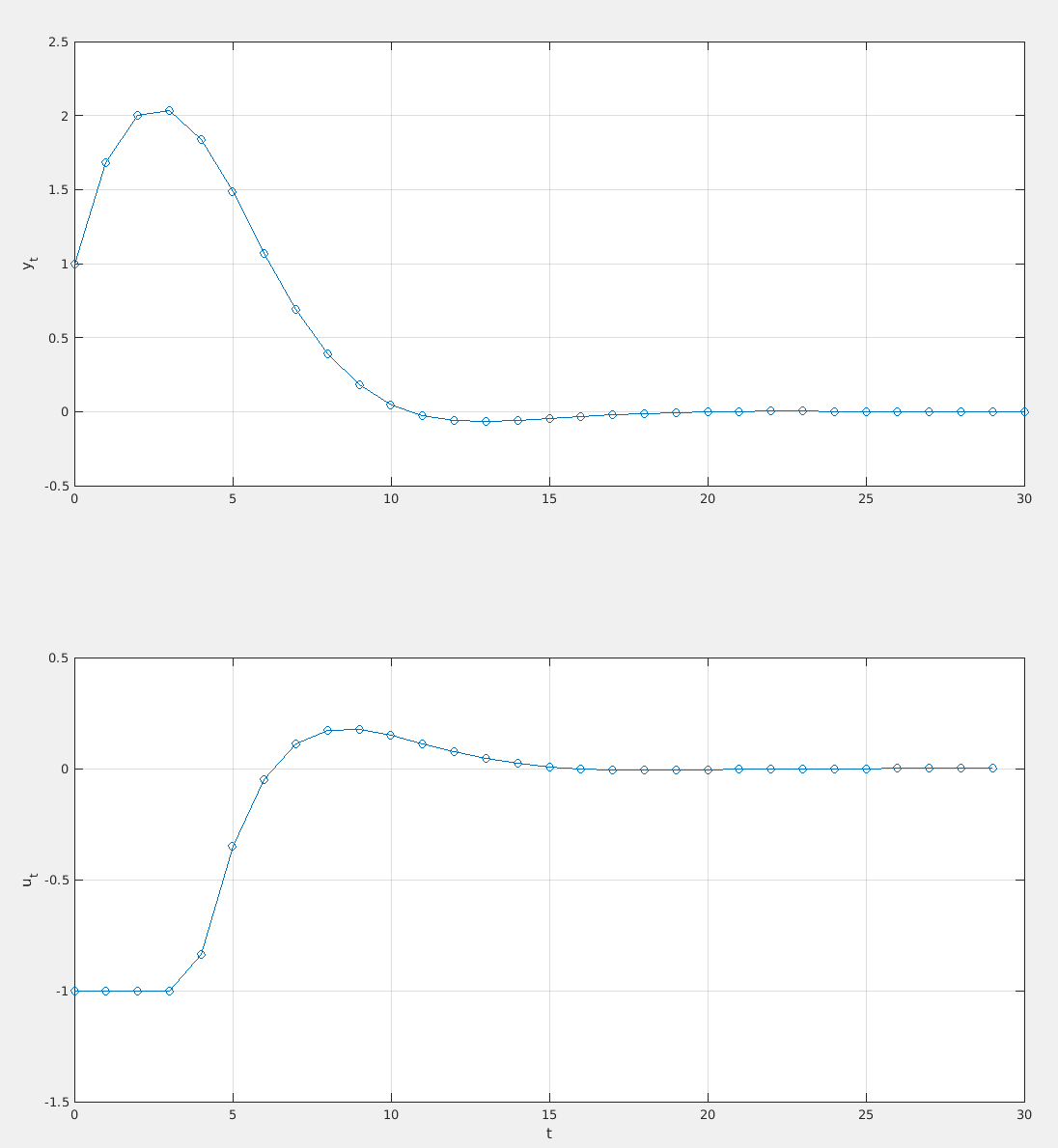

We set , which is our feedback. This is the reason our process looks like it does in the graph.

b)

The main difference is that we now iterate over every discrete time step, and calculate the optimization problem with .

Since we assumed a perfect model, the result is near identical

c)

Now, using the real plant instead, we see that the MPC still manages to reach the setpoint of , but not as good as when we had a perfect model.

This is done in the same way as b), but when moving a time step ahead, we extract the current state from the real plant, and not the model.

This is done in the same way as b), but when moving a time step ahead, we extract the current state from the real plant, and not the model.